rustc_codegen_ssa: use bitcasts instead of type punning for scalar transmutes.

This specifically helps with `f32` <-> `u32` (`from_bits`, `to_bits`) in Rust-GPU (`rustc_codegen_spirv`), where (AFAIK) we don't yet have enough infrastructure to turn type punning memory accesses into SSA bitcasts.

(There may be more instances, but the one I've seen myself is `f32::signum` from `num-traits` inspecting e.g. the sign bit)

Sadly I've had to make an exception for `transmute`s between pointers and non-pointers, as LLVM disallows using `bitcast` for them.
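
To make the affected pattern concrete, here is a minimal sketch (not taken from the compiler or from `num-traits`; the helper names `sign_bit_set` and `bits_via_transmute` are hypothetical). It shows a sign-bit check built on `to_bits` and the equivalent explicit scalar transmute, which is the kind of operation this PR lowers to a bitcast:

```rust
use std::mem;

/// Returns true if the sign bit of `x` is set (hypothetical helper, similar
/// in spirit to what a `signum` implementation might inspect).
pub fn sign_bit_set(x: f32) -> bool {
    // `to_bits` reinterprets the `f32` as a `u32` without changing any bits.
    (x.to_bits() >> 31) == 1
}

/// The same reinterpretation written as an explicit scalar transmute.
pub fn bits_via_transmute(x: f32) -> u32 {
    // Sound: `f32` and `u32` have the same size and every bit pattern is valid.
    unsafe { mem::transmute::<f32, u32>(x) }
}
```

Both functions only move bits between scalar types of the same size, so no memory round-trip is conceptually required.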

r? `@nagisa` cc `@khyperia`

Fixes multiple issues with counters, with simplification

Includes a change to the implicit else span in ast_lowering, so coverage

of the implicit else no longer spans the `then` block.

Adds coverage for unused closures and async function bodies.

Fixes: #78542

Adding unreachable regions for known MIR missing from coverage map

Cleaned up PR commits, and removed link-dead-code requirement and tests

Coverage no longer depends on Issue #76038 (`-C link-dead-code` is

no longer needed or enforced, so MSVC can use the same tests as

Linux and MacOS now)

Restrict adding unreachable regions to covered files

Improved the code that adds coverage for uncalled functions (with MIR

but not-codegenned) to avoid generating coverage in files not already

included in the files with covered functions.

Resolved last known issue requiring --emit llvm-ir workaround

Fixed bugs in how unreachable code spans were added.

Warn if `dsymutil` returns an error code

This checks the error code returned by `dsymutil` and warns if it failed. It

also provides the stdout and stderr logs from `dsymutil`, similar to the native

linker step.
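
A rough sketch of this kind of check, assuming a plain `std::process::Command` invocation rather than the compiler's actual linker plumbing (the function names `failure_warning` and `run_and_warn` are hypothetical):

```rust
use std::process::Command;

/// Builds a warning message when a tool exited unsuccessfully, or `None` on success.
pub fn failure_warning(
    tool: &str,
    success: bool,
    code: Option<i32>,
    stdout: &[u8],
    stderr: &[u8],
) -> Option<String> {
    if success {
        return None;
    }
    // The exit code is absent if the process was terminated by a signal.
    let code = code.map_or_else(|| "unknown".to_string(), |c| c.to_string());
    Some(format!(
        "warning: `{}` failed with exit code {}\nstdout:\n{}\nstderr:\n{}",
        tool,
        code,
        String::from_utf8_lossy(stdout),
        String::from_utf8_lossy(stderr)
    ))
}

/// Runs `tool` with `args` and prints a warning instead of aborting on failure.
pub fn run_and_warn(tool: &str, args: &[&str]) {
    match Command::new(tool).args(args).output() {
        Ok(out) => {
            let status = out.status;
            if let Some(msg) =
                failure_warning(tool, status.success(), status.code(), &out.stdout, &out.stderr)
            {
                eprintln!("{}", msg);
            }
        }
        Err(err) => eprintln!("warning: could not run `{}`: {}", tool, err),
    }
}
```

Keeping the message construction separate from process spawning makes the warning logic checkable without actually invoking `dsymutil`.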

I tried to think of ways to test this change, but so far I haven't found a good way, as you'd likely need to inject some nonsensical args into `dsymutil` to induce failure, which feels too artificial to me. Also, https://github.com/rust-lang/rust/issues/79361 suggests Rust is on the verge of disabling `dsymutil` by default, so perhaps it's okay for this change to be untested. In any case, I'm happy to add a test if someone sees a good approach.

Fixes https://github.com/rust-lang/rust/issues/78770

Stop adding '*' at the end of slice and str typenames for MSVC case

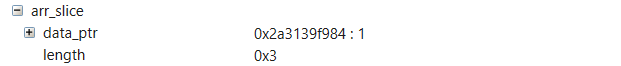

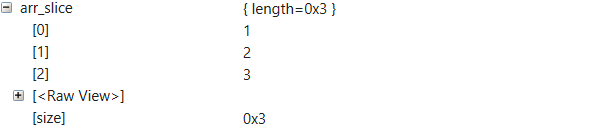

When computing debug info for MSVC debuggers, Rust compiler emits C++ style type names for compatibility with .natvis visualizers. All Ref types are treated as equivalences of C++ pointers in this process, and, as a result, their type names end with a '\*'. Since Slice and Str are treated as Ref by the compiler, their type names also end with a '\*'. This causes the .natvis engine for WinDbg fails to display data of Slice and Str objects. We addressed this problem simply by removing the '*' at the end of type names for Slice and Str types.

Debug info in WinDbg before the fix:

Debug info in WinDbg after the fix:

This change has also been tested with debuggers for Visual Studio, VS Code C++ and VS Code LLDB to make sure that it does not affect the behavior of other kinds of debugger.
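
The naming rule can be sketched as follows. This is a toy model only: the `Ty` enum, the `msvc_type_name` function, and the concrete spellings such as `slice<...>` are assumptions for illustration and do not mirror the compiler's real types.

```rust
/// A tiny, hypothetical stand-in for the compiler's type representation.
pub enum Ty {
    Int,
    Str,
    Slice(Box<Ty>),
    Ref(Box<Ty>),
}

/// Computes a C++-style name, appending `*` to references except when the
/// pointee is a slice or `str`.
pub fn msvc_type_name(ty: &Ty) -> String {
    match ty {
        Ty::Int => "i32".to_string(),
        Ty::Str => "str".to_string(),
        Ty::Slice(inner) => format!("slice<{}>", msvc_type_name(inner)),
        Ty::Ref(inner) => {
            let name = msvc_type_name(inner);
            match &**inner {
                // No trailing `*` here, so a visualizer keyed on the
                // un-starred name can still match.
                Ty::Str | Ty::Slice(_) => name,
                _ => format!("{}*", name),
            }
        }
    }
}
```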

Properly handle attributes on statements

We now collect tokens for the underlying node wrapped by `StmtKind`

instead of storing tokens directly in `Stmt`.

`LazyTokenStream` now supports capturing a trailing semicolon after it

is initially constructed. This allows us to avoid refactoring statement

parsing to wrap the parsing of the semicolon in `parse_tokens`.

Attributes on item statements

(e.g. `fn foo() { #[bar] struct MyStruct; }`) are now treated as

item attributes, not statement attributes, which is consistent with how

we handle attributes on other kinds of statements. The feature-gating

code is adjusted so that proc-macro attributes are still allowed on item

statements on stable.

Two built-in macros (`#[global_allocator]` and `#[test]`) needed to be

adjusted to support being passed `Annotatable::Stmt`.
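
For readers less familiar with the terminology, the following minimal sketch shows the two kinds of attribute positions discussed here, using only stable built-in attributes (the function name `describe` is hypothetical):

```rust
/// Shows an attribute on an item statement and one on a `let` statement.
pub fn describe() -> String {
    // Attribute on an item statement: it applies to the item `MyStruct`.
    #[derive(Debug, Default)]
    struct MyStruct {
        value: u32,
    }

    // Attribute on a `let` statement.
    #[allow(unused_variables)]
    let unused = 1;

    format!("{:?}", MyStruct::default())
}
```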

Add support for Arm64 Catalyst on ARM Macs

This is an iteration on https://github.com/rust-lang/rust/pull/63467 which was merged a while ago. In the aforementioned PR, I added support for the `x86_64-apple-ios-macabi` target triple, which is Catalyst: iOS apps running on macOS.

Very soon, Apple will launch ARM64 based Macs which will introduce `aarch64_apple_darwin.rs`, macOS apps using the Darwin ABI running on ARM. This PR adds support for Catalyst apps on ARM Macs: iOS apps compiled for the darwin ABI.

I don't have access to an Apple Developer Transition Kit (DTK), so I can't really test if the generated binaries work correctly. I'm vaguely hopeful that somebody with access to a DTK could give this a spin.

Updated the list of white-listed target features for x86

This PR both adds in-source documentation on what to look out for when adding a new (X86) feature set and [adds all that are detectable at run-time in Rust stable as of 1.27.0](https://github.com/rust-lang/stdarch/blob/master/crates/std_detect/src/detect/arch/x86.rs).

This should only enable the use of the corresponding LLVM intrinsics.

Actual intrinsics need to be added separately in rust-lang/stdarch.

It also re-orders the run-time-detect test statements to be more consistent

with the actual list of intrinsics whitelisted and removes underscores not present

in the actual names (which might be mistaken as being part of the name)
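
To illustrate the difference between a whitelisted compile-time target feature and run-time detection, here is a small sketch using `avx2` as an example feature (the function names are hypothetical; the non-x86 fallback is only there so the snippet builds everywhere):

```rust
/// Compile-time check: true only if the crate was built with AVX2 enabled,
/// for example via `-C target-feature=+avx2`.
pub fn compiled_with_avx2() -> bool {
    cfg!(target_feature = "avx2")
}

/// Run-time check on x86 targets, using the detection macro from `std`.
#[cfg(any(target_arch = "x86", target_arch = "x86_64"))]
pub fn detected_avx2() -> bool {
    std::is_x86_feature_detected!("avx2")
}

/// Fallback for other architectures, where the feature never exists.
#[cfg(not(any(target_arch = "x86", target_arch = "x86_64")))]
pub fn detected_avx2() -> bool {
    false
}
```

A binary compiled with the feature enabled should only be run on hardware where the run-time check would also succeed.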

The reference for LLVM's feature names used is [this file](https://github.com/llvm/llvm-project/blob/master/llvm/include/llvm/Support/X86TargetParser.def).

This PR was motivated as the compiler-side part of allowing #67329 to be addressed over on rust-lang/stdarch.

rustc_target: Further cleanup use of target options

Follow up to https://github.com/rust-lang/rust/pull/77729.

Implements items 2 and 4 from the list in https://github.com/rust-lang/rust/pull/77729#issue-500228243.

The first commit collapses uses of `target.options.foo` into `target.foo`.

The second commit renames some target options to avoid tautology:

`target.target_endian` -> `target.endian`

`target.target_c_int_width` -> `target.c_int_width`

`target.target_os` -> `target.os`

`target.target_env` -> `target.env`

`target.target_vendor` -> `target.vendor`

`target.target_family` -> `target.os_family`

`target.target_mcount` -> `target.mcount`

r? `@Mark-Simulacrum`

Monomorphize a type argument of size-of operation during codegen

This wasn't necessary until MIR inliner started to consider drop glue as

a candidate for inlining; introducing for the first time a generic use

of size-of operation.

No test at this point since this only happens with a custom inlining

threshold.
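
As a user-level analogy (not the MIR-level operation itself), a generic use of size-of only has a concrete value once the type argument has been substituted, which is why the type must be monomorphized first. The function name `size_in_bytes` is hypothetical:

```rust
use std::mem::size_of;

/// A generic use of `size_of`: the result is only known once `T` is substituted.
pub fn size_in_bytes<T>() -> usize {
    size_of::<T>()
}
```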

inliner: Use substs_for_mir_body

Changes from 68965 extended the kind of instances that are being

inlined. For some of those, the `instance_mir` returns a MIR body that

is already expressed in terms of the types found in substitution array,

and doesn't need further substitution.

Use `substs_for_mir_body` to take that into account.

Resolves #78529.

Resolves #78560.

The target option cleanup above was done with an eye on merging `TargetOptions` into `Target`.

`TargetOptions` as a separate structure is mostly an implementation detail of `Target` construction; all its fields logically belong to `Target` and are available from `Target` through `Deref` impls.
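
The `Deref` arrangement can be sketched with heavily simplified stand-ins for the real structures (the field selection and the helper `is_little_endian` are assumptions for illustration):

```rust
use std::ops::Deref;

/// Simplified stand-in: only two of the many real options.
pub struct TargetOptions {
    pub endian: String,
    pub os: String,
}

/// Simplified stand-in for the target description.
pub struct Target {
    pub llvm_target: String,
    pub options: TargetOptions,
}

impl Deref for Target {
    type Target = TargetOptions;

    fn deref(&self) -> &TargetOptions {
        &self.options
    }
}

/// Thanks to `Deref`, `target.endian` works without spelling out `target.options.endian`.
pub fn is_little_endian(target: &Target) -> bool {
    target.endian == "little"
}
```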

Implementing the Graph traits for the BasicCoverageBlock graph.

optimized replacement of counters with expressions plus new BCB graphviz

* Avoid adding coverage to unreachable blocks.

* Special case for Goto at the end of the body. Make it non-reportable.

Improved debugging and formatting options (from env)

Don't automatically add counters to BCBs without CoverageSpans. They may

still get counters but only if there are dependencies from

other BCBs that have spans, I think.

Make CodeRegions optional for Counters too. It is

possible to inject counters (`llvm.instrprof.increment` intrinsic calls)

without corresponding code regions in the coverage map. An expression

can still use these counter values.
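
The relationship between counters and expressions can be sketched like this. It is a toy model, not the compiler's data structures: `Operand`, `Op`, `Expression` and `eval` are hypothetical names, and only addition and subtraction are modeled.

```rust
/// An operand refers either to a physical counter or to another expression.
#[derive(Clone, Copy)]
pub enum Operand {
    Counter(usize),
    Expression(usize),
}

#[derive(Clone, Copy)]
pub enum Op {
    Add,
    Subtract,
}

/// An expression derives a count from two operands instead of using its own counter.
pub struct Expression {
    pub lhs: Operand,
    pub op: Op,
    pub rhs: Operand,
}

/// Evaluates an operand against recorded counter values.
pub fn eval(operand: Operand, counters: &[u64], expressions: &[Expression]) -> u64 {
    match operand {
        Operand::Counter(i) => counters[i],
        Operand::Expression(i) => {
            let e = &expressions[i];
            let lhs = eval(e.lhs, counters, expressions);
            let rhs = eval(e.rhs, counters, expressions);
            match e.op {
                Op::Add => lhs + rhs,
                Op::Subtract => lhs - rhs,
            }
        }
    }
}
```

For example, if counter 0 counts entries to an `if` and counter 1 counts the `then` branch, the implicit `else` count can be expressed as counter 0 minus counter 1 without a third physical counter.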

Refactored instrument_coverage.rs -> instrument_coverage/mod.rs, and

then broke up the mod into multiple files.

Compiling with coverage, with the expression optimization, works on

the json5format crate and its dependencies.

Refactored debug features from mod.rs to debug.rs

foreign_modules query hash table lookups

When compiling a large monolithic crate we're seeing huge times in the `foreign_modules` query due to repeated iteration over foreign modules (in order to find a module by its id). This implements hash table lookups, which massively reduces time spent in that query in this particular case. We'll need to see if the overhead of creating the hash table has a negative impact on performance in more normal compilation scenarios.
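
The trade-off can be sketched as follows, using a simplified, hypothetical `ForeignModule` keyed by a plain integer id rather than the compiler's real types:

```rust
use std::collections::HashMap;

/// Simplified stand-in for a foreign module record.
#[derive(Debug, Clone, PartialEq)]
pub struct ForeignModule {
    pub def_id: u32,
    pub items: Vec<u32>,
}

/// Before: every lookup scans the whole list, O(n) per query.
pub fn find_linear(modules: &[ForeignModule], def_id: u32) -> Option<&ForeignModule> {
    modules.iter().find(|m| m.def_id == def_id)
}

/// After: pay once to build the table, then each lookup is O(1) on average.
pub fn build_index(modules: Vec<ForeignModule>) -> HashMap<u32, ForeignModule> {
    modules.into_iter().map(|m| (m.def_id, m)).collect()
}
```

Building the table costs one pass and extra memory up front, which is the overhead mentioned above for crates with few foreign modules.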

I'm working with `@wesleywiser` on this.

Add compiler support for LLVM's x86_64 ERMSB feature

This change is needed for compiler-builtins to check for this feature

when implementing memcpy/memset. See:

https://github.com/rust-lang/compiler-builtins/pull/365

Without this change, the following code compiles, but does nothing:

```rust
#[cfg(target_feature = "ermsb")]
pub unsafe fn ermsb_memcpy() { /* ... */ }
```

The change just does compile-time detection. I think that runtime

detection will have to come in a follow-up CL to std-detect.
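
A minimal sketch of what compile-time detection allows, assuming a hypothetical `memcpy_strategy` helper that merely reports which path would be chosen:

```rust
/// Reports which copy strategy would be selected at compile time.
/// The choice is fixed when the crate is built, not when it runs.
pub fn memcpy_strategy() -> &'static str {
    if cfg!(target_feature = "ermsb") {
        "rep movsb"
    } else {
        "generic copy loop"
    }
}
```

Building with `-C target-feature=+ermsb` on an x86_64 target would select the first branch; otherwise the fallback is used.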

Like all the CPU feature flags, this just references #44839

Signed-off-by: Joe Richey <joerichey@google.com>

rustc_mir: track inlined callees in SourceScopeData.

We now record which MIR scopes are the roots of *other* (inlined) functions' scope trees, which allows us to generate the correct debuginfo in codegen, similar to what LLVM inlining generates.

This PR makes the `ui` test `backtrace-debuginfo` pass, if the MIR inliner is turned on by default.

Also, `#[track_caller]` is now correct in the face of MIR inlining (cc `@anp`).
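
As a reminder of the behavior that has to survive inlining, here is a small sketch (function names are hypothetical): a `#[track_caller]` function must report the location of its call site, not its own location, whether or not it gets inlined.

```rust
use std::panic::Location;

/// Returns the line number of whoever called this function.
#[track_caller]
pub fn caller_line() -> u32 {
    Location::caller().line()
}

/// Calls `caller_line` from two consecutive source lines.
pub fn two_call_sites() -> (u32, u32) {
    let first = caller_line();
    let second = caller_line();
    (first, second)
}
```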

Fixes #76997.

r? `@rust-lang/wg-mir-opt`

Updated the added documentation in llvm_util.rs to note which copies of LLVM need to be inspected.

Removed avx512bf16 and avx512vp2intersect because they are unsupported before LLVM 9, and building with an external LLVM 8 is still supported

Re-introduced detection testing previously removed for un-requestable features tsc and mmx

Addresses Issue #78286

Libraries compiled with coverage and linked without enabling coverage

would fail when attempting to add the library's coverage statements to

the codegen coverage context (None).

Now, if coverage statements are encountered while compiling / linking

with `-Z instrument-coverage` disabled, codegen will *not* attempt to

add code regions to a coverage map, and it will not inject the LLVM

instrprof_increment intrinsic calls.
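
The guard described above can be sketched as follows. The types `CoverageContext` and `CodegenContext` and the function `add_code_region` are hypothetical simplifications, not the compiler's actual API:

```rust
/// Hypothetical coverage state that only exists when instrumentation is enabled.
#[derive(Default)]
pub struct CoverageContext {
    pub regions: Vec<String>,
}

/// Hypothetical codegen context; `coverage` is `None` when coverage is disabled.
pub struct CodegenContext {
    pub coverage: Option<CoverageContext>,
}

/// Records a code region if coverage is enabled and returns whether it did so.
/// With coverage disabled the statement is skipped instead of failing.
pub fn add_code_region(cx: &mut CodegenContext, region: &str) -> bool {
    match cx.coverage.as_mut() {
        Some(cov) => {
            cov.regions.push(region.to_string());
            true
        }
        None => false,
    }
}
```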