interpret: rename Tag/PointerTag to Prov/Provenance

We were pretty inconsistent with calling this the "tag" vs the "provenance" of the pointer; I think we should consistently call it "provenance".

r? `@oli-obk`

Let's avoid using two different terms for the same thing -- let's just call it "provenance" everywhere.

In Miri, provenance consists of an AllocId and an SbTag (Stacked Borrows tag), which made this even more confusing.
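To make the terminology concrete, here is a minimal sketch of a pointer that is generic over its provenance. All type and field names below are illustrative assumptions, not the interpreter's actual definitions.

```rust
/// Hypothetical stand-ins; the names are illustrative only.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct AllocId(u64);

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct SbTag(u64);

/// A pointer is an offset plus some provenance; the machine picks the provenance type.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct Pointer<Prov> {
    offset: u64,
    provenance: Prov,
}

/// Sketch of a Miri-like provenance: which allocation, plus a Stacked Borrows tag.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct MiriProvenance {
    alloc_id: AllocId,
    sb: SbTag,
}

impl<Prov: Copy> Pointer<Prov> {
    /// Offsetting changes the address part but keeps the provenance untouched.
    fn wrapping_offset(self, delta: u64) -> Self {
        Pointer { offset: self.offset.wrapping_add(delta), provenance: self.provenance }
    }
}

fn main() {
    let prov = MiriProvenance { alloc_id: AllocId(1), sb: SbTag(42) };
    let p = Pointer { offset: 8, provenance: prov };
    let q = p.wrapping_offset(4);
    assert_eq!(q.offset, 12);
    assert_eq!(q.provenance, p.provenance);
}
```

In this sketch the "tag" is only one component of the provenance, which is why using the two words interchangeably was confusing.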

interpret: make some large types not Copy

Also remove some unused trait impls (mostly HashStable).

This didn't find any unnecessary copies that I managed to avoid, but it might still be better to require explicit clone for these types? Not sure.

r? `@oli-obk`

Use constant eval to do strict mem::uninit/zeroed validity checks

I'm not sure about the code organisation here, I just dumped the check in rustc_const_eval at the root. Not hard to move it elsewhere, in any case.

Also, this means cranelift codegen intrinsics lose the strict checks, since they don't seem to depend on rustc_const_eval, and I didn't see a point in keeping around two copies.

I also left comments in the is_zero_valid methods about "uhhh help how do i do this", those apply to both methods equally.

Also rustc_codegen_ssa now depends on rustc_const_eval... is this okay?

Pinging `@RalfJung` since you were the one who mentioned this to me, so I'm assuming you're interested.

Haven't had a chance to run full tests on this since it's really warm, and it's 1AM, I'll check out any failures/comments in the morning :)

interpret/visitor: support visiting with a PlaceTy

Finally we can visit a `PlaceTy` in a way that will only do `force_allocation` when needed to visit a field. :)

r? `@oli-obk`

interpret: get rid of MemPlaceMeta::Poison

This is achieved by refactoring the projection code (`{mplace,place,operand}_{downcast,field,index,...}`) so that we no longer need to call `assert_mem_place` in the operand handling.

Pull Derefer before ElaborateDrops

_Follow-up work to #97025, #96549, #96116, #95887, and #95649._

This moves `Derefer` before `ElaborateDrops` and creates a new `Rvalue` called `VirtualRef` that allows us to bypass many constraints for `DerefTemp`.

r? `@oli-obk`

interpret: refactor projection handling code

Moves our projection handling code into a common file, and avoids the use of a general mplace-based fallback function by having more specialized implementations.

mplace_index (and the other slice-related functions) could be more efficient by copy-pasting the body of operand_index. Or we could do some trait magic to share the code between them. But for now this is probably fine.

This is the common part of https://github.com/rust-lang/rust/pull/99013 and https://github.com/rust-lang/rust/pull/99097. I am seeing some strange perf results so this probably should be its own change so we know which diff caused which perf changes...

r? `@oli-obk`

Implement `SourceMap::is_span_accessible`

This patch adds `SourceMap::is_span_accessible` and replaces `span_to_snippet(span).is_ok()` and `span_to_snippet(span).is_err()` with it. This removes a `&str` to `String` conversion.
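A toy sketch of why a dedicated predicate avoids the allocation. The `SourceMap` and `Span` below are simplified stand-ins (a single in-memory string and a byte range), assumed only for illustration; the real types are considerably more involved.

```rust
/// Toy stand-in: a span is a byte range into one source string.
#[derive(Clone, Copy)]
struct Span {
    lo: usize,
    hi: usize,
}

/// Toy stand-in for a source map holding a single file.
struct SourceMap {
    src: String,
}

impl SourceMap {
    /// Returns an owned copy of the text, which allocates a `String`.
    fn span_to_snippet(&self, sp: Span) -> Result<String, ()> {
        self.src.get(sp.lo..sp.hi).map(|s| s.to_string()).ok_or(())
    }

    /// Answers only "can this span be read?" without copying any text.
    fn is_span_accessible(&self, sp: Span) -> bool {
        self.src.get(sp.lo..sp.hi).is_some()
    }
}

fn main() {
    let sm = SourceMap { src: "fn main() {}".to_string() };
    let ok = Span { lo: 0, hi: 2 };
    let bad = Span { lo: 5, hi: 100 };
    // Both checks agree, but only the first one builds a throwaway `String`.
    assert_eq!(sm.span_to_snippet(ok).is_ok(), sm.is_span_accessible(ok));
    assert_eq!(sm.span_to_snippet(bad).is_err(), !sm.is_span_accessible(bad));
}
```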

don't allow ZST in ScalarInt

There are several indications that we should not represent ZST as a ScalarInt:

- We had two ways to have ZST valtrees, either an empty `Branch` or a `Leaf` with a ZST in it. `ValTree::zst()` used the former, but the latter could possibly arise as well.

- Likewise, the interpreter had `Immediate::Uninit` and `Immediate::Scalar(Scalar::ZST)`.

- LLVM codegen already had to special-case ZST ScalarInt.

So I propose we stop using ScalarInt to represent ZST (which are clearly not integers). Instead, we can add new ZST variants to those types that did not have other variants which could be used for this purpose.
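One way to picture the proposal is a scalar type whose size cannot be zero, leaving the empty `Branch` as the only ZST valtree. This is a simplified sketch: storing the size as a `NonZeroU8` byte count and the `try_new` constructor are assumptions made for illustration, not a statement about the actual rustc definitions.

```rust
use std::num::NonZeroU8;

/// Simplified integer scalar: `size` is in bytes and can never be zero.
#[derive(Debug, PartialEq)]
struct ScalarInt {
    data: u128,
    size: NonZeroU8,
}

impl ScalarInt {
    /// Hypothetical constructor: returns `None` for size 0, so a ZST cannot be an "integer".
    fn try_new(data: u128, size: u8) -> Option<Self> {
        Some(ScalarInt { data, size: NonZeroU8::new(size)? })
    }
}

/// Simplified valtree with the two variants mentioned in the text.
#[derive(Debug, PartialEq)]
enum ValTree {
    Leaf(ScalarInt),
    Branch(Vec<ValTree>),
}

impl ValTree {
    /// The single remaining representation of a ZST: an empty branch.
    fn zst() -> Self {
        ValTree::Branch(Vec::new())
    }
}

fn main() {
    // A zero-sized "integer" is rejected at construction time.
    assert!(ScalarInt::try_new(0, 0).is_none());
    // Ordinary integers still work as leaves.
    let leaf = ValTree::Leaf(ScalarInt::try_new(7, 1).unwrap());
    assert_ne!(leaf, ValTree::zst());
    assert_eq!(ValTree::zst(), ValTree::Branch(vec![]));
}
```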

Based on https://github.com/rust-lang/rust/pull/98831. Only the commits starting from "don't allow ZST in ScalarInt" are new.

r? `@oli-obk`

Clarify MIR semantics of storage statements

Seems worthwhile to start closing out some of the less controversial open questions about MIR semantics. Hopefully this is fairly non-controversial - it's what we implement already, and I see no reason to do anything more restrictive. cc `@tmiasko` who commented on this when it was discussed in the original PR that added these docs.

interpret: use AllocRange in UninitByteAccess

Also use the nice new format string syntax in `interpret/error.rs`, and use the `#` flag to add `0x` prefixes where applicable.
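For readers unfamiliar with the two formatting features mentioned, here is a small standalone illustration using only the standard library. The `describe` helper and its message text are made up for this example and are not taken from `interpret/error.rs`.

```rust
/// Hypothetical helper: formats a byte range using inline captured arguments
/// and the `#` flag, which adds the `0x` prefix to hexadecimal output.
fn describe(offset: u64, len: u64) -> String {
    format!("reading {len:#x} bytes at offset {offset:#x}")
}

fn main() {
    assert_eq!(describe(255, 16), "reading 0x10 bytes at offset 0xff");
    // Without `#`, the prefix has to be written by hand.
    assert_eq!(format!("0x{:x}", 255), format!("{:#x}", 255));
}
```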

r? `@oli-obk`

Make MIR basic blocks field public

This makes it possible to mutably borrow different fields of the MIR body without resorting to methods like `basic_blocks_local_decls_mut_and_var_debug_info`.

To preserve validity of control flow graph caches in the presence of modifications, a new struct `BasicBlocks` wraps together basic blocks and control flow graph caches.

`BasicBlocks` dereferences to `IndexVec<BasicBlock, BasicBlockData>`. Mutable access, on the other hand, requires an explicit `as_mut()` call.
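The wrapper pattern described here can be sketched in a few lines. This is a simplified model under stated assumptions: blocks are represented only by their successor indices, a plain `Vec` replaces `IndexVec`, and a single predecessor-count cache stands in for the real control flow graph caches.

```rust
use std::cell::OnceCell;
use std::ops::Deref;

/// Simplified model: each block is just the list of its successor indices.
struct BasicBlocks {
    blocks: Vec<Vec<usize>>,
    /// Lazily computed cache, standing in for the real CFG caches.
    predecessors: OnceCell<Vec<usize>>,
}

impl BasicBlocks {
    fn new(blocks: Vec<Vec<usize>>) -> Self {
        Self { blocks, predecessors: OnceCell::new() }
    }

    /// Number of predecessors per block, computed on first use and then cached.
    fn predecessor_counts(&self) -> &[usize] {
        self.predecessors.get_or_init(|| {
            let mut counts = vec![0; self.blocks.len()];
            for succs in &self.blocks {
                for &s in succs {
                    counts[s] += 1;
                }
            }
            counts
        })
    }

    /// Mutable access is explicit and drops the cache, since edits may change the CFG.
    fn as_mut(&mut self) -> &mut Vec<Vec<usize>> {
        self.predecessors = OnceCell::new();
        &mut self.blocks
    }
}

/// Read-only access goes through `Deref` and leaves the cache intact.
impl Deref for BasicBlocks {
    type Target = Vec<Vec<usize>>;
    fn deref(&self) -> &Self::Target {
        &self.blocks
    }
}

fn main() {
    let mut bbs = BasicBlocks::new(vec![vec![1, 2], vec![2], vec![]]);
    assert_eq!(bbs.len(), 3); // `len` comes from `Vec` via `Deref`
    assert_eq!(bbs.predecessor_counts().to_vec(), vec![0, 1, 2]);
    bbs.as_mut()[0] = vec![1]; // the edit invalidates the cache
    assert_eq!(bbs.predecessor_counts().to_vec(), vec![0, 1, 1]);
}
```

Because the cache lives next to the blocks and every mutable borrow passes through `as_mut()`, the field itself can be public without risking stale cache contents.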

interpret: remove support for unsized_locals

I added support for unsized_locals in https://github.com/rust-lang/rust/pull/59780 but the current implementation is a crude hack and IMO definitely not the right way to have unsized locals in MIR. It also [causes problems](https://rust-lang.zulipchat.com/#narrow/stream/146212-t-compiler.2Fconst-eval/topic/Missing.20Layout.20Check.20in.20.60interpret.2Foperand.2Ers.60.3F), and what codegen does is unsound and has been for years; clearly nobody cares (so I hope nobody actually relies on that implementation, and I'll be happy if Miri ensures they do not). I think if we want to have unsized locals in Miri/MIR we should add them properly, either by having a `StorageLive` that takes metadata or by having an `alloca` that returns a pointer (making the ptr indirection explicit) or something like that.

So, this PR removes the `LocalValue::Unallocated` hack. It adds `Immediate::Uninit`, for several reasons:

- This lets us still do fairly little work in `push_stack_frame`, in particular we do not actually have to create any allocations.

- If/when I remove `ScalarMaybeUninit`, we will need something like this to have an "optimized" representation of uninitialized locals. Without this we'd have to put uninitialized integers into the heap!

- const-prop needs some way to indicate "I don't know the value of this local"; it used to use `LocalValue::Unallocated` for that, now it can use `Immediate::Uninit`.

There is still a fundamental difference between `LocalValue::Unallocated` and `Immediate::Uninit`: the latter is considered a regular local that you can read from and write to, it just has a more optimized representation when compared with an actual `Allocation` that is fully uninit. In contrast, `LocalValue::Unallocated` had this really odd behavior where you would write to it but not read from it. (This is in fact what caused the problems mentioned above.)
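The difference can be illustrated with a small model of a stack frame in which every live local starts out as `Uninit` without any backing allocation. The types below are heavily simplified assumptions (no `Operand`, no memory places, a `u64` scalar); only the names `Immediate::Uninit` and `LocalValue` come from the description above.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
enum Immediate {
    Scalar(u64),
    /// Fully uninitialized, but otherwise a regular readable and writable local.
    Uninit,
}

#[derive(Clone, Copy, Debug, PartialEq)]
enum LocalValue {
    Dead,
    Live(Immediate),
}

struct Frame {
    locals: Vec<LocalValue>,
}

impl Frame {
    /// Pushing a frame creates no allocations: every local starts as `Uninit`.
    fn push(num_locals: usize) -> Self {
        Frame { locals: vec![LocalValue::Live(Immediate::Uninit); num_locals] }
    }

    /// Reading a live local always works, even if it is still uninitialized.
    fn read(&self, i: usize) -> Result<Immediate, &'static str> {
        match self.locals[i] {
            LocalValue::Live(imm) => Ok(imm),
            LocalValue::Dead => Err("read of dead local"),
        }
    }

    /// Writing replaces the value in place; dead locals reject writes.
    fn write(&mut self, i: usize, value: Immediate) -> Result<(), &'static str> {
        match &mut self.locals[i] {
            LocalValue::Live(slot) => {
                *slot = value;
                Ok(())
            }
            LocalValue::Dead => Err("write to dead local"),
        }
    }

    fn storage_dead(&mut self, i: usize) {
        self.locals[i] = LocalValue::Dead;
    }
}

fn main() {
    let mut frame = Frame::push(2);
    assert_eq!(frame.read(0), Ok(Immediate::Uninit));
    frame.write(0, Immediate::Scalar(7)).unwrap();
    assert_eq!(frame.read(0), Ok(Immediate::Scalar(7)));
    frame.storage_dead(1);
    assert!(frame.read(1).is_err());
}
```

In this model there is no state that can be written but not read, which is the asymmetry that `LocalValue::Unallocated` had.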

While at it I also did two drive-by cleanups/improvements:

- In `pop_stack_frame`, do the return value copying and local deallocation while the frame is still on the stack. This leads to better error locations being reported. The old errors were [sometimes rather confusing](https://rust-lang.zulipchat.com/#narrow/stream/269128-miri/topic/Cron.20Job.20Failure.202022-06-24/near/287445522).

- Deduplicate `copy_op` and `copy_op_transmute`.

r? `@oli-obk`

Operand::Uninit is an *allocated* operand that is fully uninitialized.

This lets us lazily allocate the actual backing store of *all* locals (no matter their ABI).

I also reordered things in pop_stack_frame at the same time.

I should probably have made that a separate commit...

Change enum->int casts to not go through MIR casts.

Follow-up to https://github.com/rust-lang/rust/pull/96814

This simplifies all backends and even gives LLVM more information about the return value of `Rvalue::Discriminant`, enabling optimizations in more cases.
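As a source-level analogy only (this is not the MIR the compiler emits), an enum-to-integer cast can be thought of as reading the discriminant and then doing an ordinary integer cast. The `Level` enum and both helper functions are hypothetical.

```rust
/// Hypothetical fieldless enum with explicit discriminants.
#[derive(Clone, Copy)]
#[repr(u8)]
enum Level {
    Low = 1,
    High = 5,
}

/// Step 1: read the discriminant in the enum's own tag type.
fn discriminant(level: Level) -> u8 {
    match level {
        Level::Low => 1,
        Level::High => 5,
    }
}

/// Step 2: a plain integer-to-integer cast of that discriminant.
fn cast_to_i64(level: Level) -> i64 {
    discriminant(level) as i64
}

fn main() {
    // The two-step version agrees with the built-in `as` cast.
    assert_eq!(cast_to_i64(Level::Low), Level::Low as i64);
    assert_eq!(cast_to_i64(Level::High), Level::High as i64);
}
```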

fix interpreter validity check on Box

Follow-up to https://github.com/rust-lang/rust/pull/98554: avoid walking over parts of the value twice.

And then move all that logic into the general visitor so that not every visitor implementation has to deal with it...

Interpret: AllocRange Debug impl, and use it more consistently

The two commits are pretty independent but it did not seem worth having two PRs for them.

r? `@oli-obk`

interpret: don't rely on ScalarPair for overflowed arithmetic

This is for https://github.com/rust-lang/rust/pull/97861.

Cc `@eddyb`

I would like to avoid making this depend on `dest.layout.abi` to avoid a branch that we are not usually covering both sides of. Though OTOH this seems like fairly straight-forward code. But let's benchmark this option first to see how bad that extra `force_allocation` really is.

CTFE interning: don't walk allocations that don't need it

The interning of const allocations visits the mplace looking for references to intern. Walking big aggregates like big static arrays can be costly, so we only do it if the allocation we're interning contains references or interior mutability.

Walking ZSTs was avoided before, and this optimization is now applied to cases where there are no references/relocations either.
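The early-exit condition can be sketched as follows. Everything here is a simplified assumption: the `Allocation` type, the `may_have_interior_mut` flag, and the `InternResult` return value are invented for illustration, and the "walk" is reduced to collecting relocation targets.

```rust
use std::collections::BTreeMap;

/// Hypothetical, simplified allocation: raw bytes plus pointer relocations.
struct Allocation {
    bytes: Vec<u8>,
    /// Maps a byte offset to the id of the allocation a pointer stored there targets.
    relocations: BTreeMap<usize, u32>,
}

/// Outcome of interning: whether the value was walked, and which allocations it references.
#[derive(Debug, PartialEq)]
struct InternResult {
    walked: bool,
    referenced: Vec<u32>,
}

fn intern(alloc: &Allocation, may_have_interior_mut: bool) -> InternResult {
    let is_zst = alloc.bytes.is_empty();
    // Skip the potentially expensive walk when it cannot find anything to intern.
    if is_zst || (alloc.relocations.is_empty() && !may_have_interior_mut) {
        return InternResult { walked: false, referenced: Vec::new() };
    }
    // Stand-in for the real typed walk over the value.
    InternResult { walked: true, referenced: alloc.relocations.values().copied().collect() }
}

fn main() {
    // A large zeroed array has no relocations, so it is not walked.
    let big = Allocation { bytes: vec![0; 1 << 20], relocations: BTreeMap::new() };
    assert!(!intern(&big, false).walked);

    // An allocation holding a pointer still gets walked.
    let mut relocations = BTreeMap::new();
    relocations.insert(0, 7);
    let with_ptr = Allocation { bytes: vec![0; 8], relocations };
    assert_eq!(intern(&with_ptr, false), InternResult { walked: true, referenced: vec![7] });
}
```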

---

While initially looking at this in the context of #93215, I've been testing with smaller allocations than the 16GB one in that issue, and with different init/uninit patterns (esp. via padding).

In that example, by default, `eval_to_allocation_raw` is the heaviest query followed by `incr_comp_serialize_result_cache`. So I'll show numbers when incremental compilation is disabled, to focus on the const allocations themselves at 95% of the compilation time, at bigger array sizes on these minimal examples like `static ARRAY: [u64; LEN] = [0; LEN];`.

That is a close construction to parts of the `ctfe-stress-test-5` benchmark, which has const allocations in the megabytes, while most crates usually have way smaller ones. This PR will have the most impact in these situations, as the walk during the interning starts to dominate the runtime.

Unicode crates (some of which are present in our benchmarks) like `ucd`, `encoding_rs`, etc come to mind as having bigger than usual allocations as well, because of big tables of code points (in the hundreds of KB, so still an order of magnitude or 2 less than the stress test).

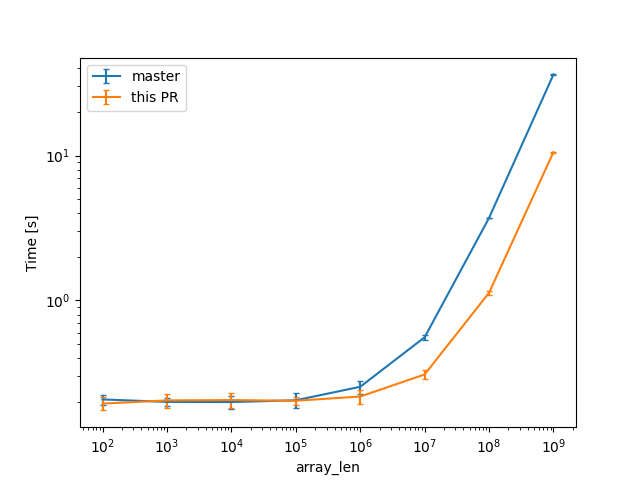

In a check build, for a single static array shown above, from 100 to 10^9 u64s (for lengths in powers of ten), the constant factors are lowered:

(log scales for easier comparisons)

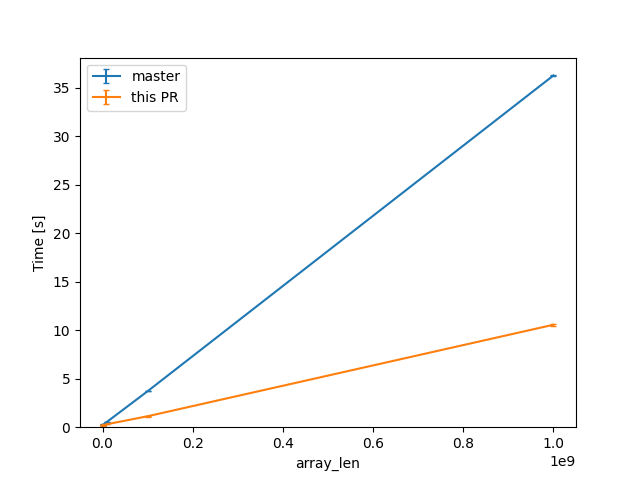

(linear scale for absolute diff at higher Ns)

For one of the alternatives of that issue

```rust
const ROWS: usize = 100_000;
const COLS: usize = 10_000;
static TWODARRAY: [[u128; COLS]; ROWS] = [[0; COLS]; ROWS];
```

we can see a similar reduction of around 3x (from 38s to 12s or so).

For the same size, the slowest case IIRC is when there are uninitialized bytes e.g. via padding

```rust
const ROWS: usize = 100_000;
const COLS: usize = 10_000;
static TWODARRAY: [[(u64, u8); COLS]; ROWS] = [[(0, 0); COLS]; ROWS];
```

then interning/walking does not dominate anymore (which means there is likely still some interesting work left to do here).

Compile times in this case rise up quite a bit, and avoiding interning walks has less impact: around 23%, from 730s on master to 568s with this PR.

Enable MIR inlining

Continuation of https://github.com/rust-lang/rust/pull/82280 by `@wesleywiser`.

#82280 has shown nice compile time wins could be obtained by enabling MIR inlining. Most of the issues in https://github.com/rust-lang/rust/issues/81567 are now fixed, except the interaction with polymorphization which is worked around specifically.

I believe we can proceed with enabling MIR inlining in the near future (preferably just after beta branching, in case we discover new issues).

Steps before merging:

- [x] figure out the interaction with polymorphization;

- [x] figure out how miri should deal with extern types;

- [x] silence the extra arithmetic overflow warnings;

- [x] remove the codegen fulfilment ICE;

- [x] remove the type normalization ICEs while compiling nalgebra;

- [ ] tweak the inlining threshold.

interpret: make a comment less scary

This slipped past my review: "has no meaning" could be read as "is undefined behavior". That is certainly not what we mean so be more clear.

cleanup mir visitor for `rustc::pass_by_value`

by changing `& $($mutability)?` to `$(& $mutability)?`
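The effect of moving the `&` inside the optional repetition can be seen in a small standalone macro. This is a toy sketch: `Location`, `make_visit`, and the generated function names are invented here and only mirror the shape of the pattern in the description.

```rust
/// Hypothetical small `Copy` type, cheap to pass by value.
#[derive(Clone, Copy)]
struct Location {
    block: u32,
}

impl Location {
    fn block(&self) -> u32 {
        self.block
    }
}

/// With `$(& $mutability)?`, the `&` is emitted only when a mutability token is given:
/// no token yields `Location` (by value), `mut` yields `&mut Location`.
/// The older form `& $($mutability)?` would have yielded `&Location` in the first case.
macro_rules! make_visit {
    ($name:ident, $($mutability:ident)?) => {
        fn $name(loc: $(& $mutability)? Location) -> u32 {
            // Method-call auto-ref/deref makes this body valid for both parameter types.
            loc.block()
        }
    };
}

make_visit!(visit_by_value,);
make_visit!(visit_by_mut_ref, mut);

fn main() {
    let mut loc = Location { block: 3 };
    assert_eq!(visit_by_value(loc), 3);
    assert_eq!(visit_by_mut_ref(&mut loc), 3);
}
```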

I also did some formatting changes because I started doing them for the visit methods I changed and then couldn't get myself to stop xx, I hope that's still fairly easy to review.