CTFE interning: don't walk allocations that don't need it

The interning of const allocations visits the mplace looking for references to intern. Walking big aggregates like big static arrays can be costly, so we only do it if the allocation we're interning contains references or interior mutability.

Walking ZSTs was avoided before, and this optimization is now applied to cases where there are no references/relocations either.
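As a rough illustration of the shortcut (a toy model, not the actual rustc code), the decision can be sketched as below. `Allocation`, its fields, and `needs_walk` are hypothetical names made up for this sketch, and only the relocation test is modelled; the interior mutability check is left out.

```rust
// Toy model, not rustc's real types: an allocation is a byte buffer plus
// the offsets at which it stores pointers (its "relocations").
struct Allocation {
    bytes: Vec<u8>,
    relocations: Vec<usize>,
}

// Hypothetical helper: does interning have to walk this allocation?
fn needs_walk(alloc: &Allocation) -> bool {
    // ZSTs were already skipped before this change.
    if alloc.bytes.is_empty() {
        return false;
    }
    // Without relocations there are no references to intern,
    // so the potentially costly walk can be avoided.
    !alloc.relocations.is_empty()
}
```

With this model, a large zeroed array (many bytes, no relocations) skips the walk, while any allocation holding at least one pointer is still visited.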

---

While initially looking at this in the context of #93215, I've been testing with smaller allocations than the 16GB one in that issue, and with different init/uninit patterns (esp. via padding).

In that example, by default, `eval_to_allocation_raw` is the heaviest query, followed by `incr_comp_serialize_result_cache`. So I'll show numbers with incremental compilation disabled, to focus on the const allocations themselves, which take 95% of the compilation time at bigger array sizes on minimal examples like `static ARRAY: [u64; LEN] = [0; LEN];`.

That construction is close to parts of the `ctfe-stress-test-5` benchmark, which has const allocations in the megabytes, while most crates usually have much smaller ones. This PR will have the most impact in these situations, as the walk during interning starts to dominate the runtime.

Unicode crates (some of which are present in our benchmarks) like `ucd`, `encoding_rs`, etc. come to mind as having bigger than usual allocations as well, because of big tables of code points (in the hundreds of KB, so still an order of magnitude or two smaller than the stress test).

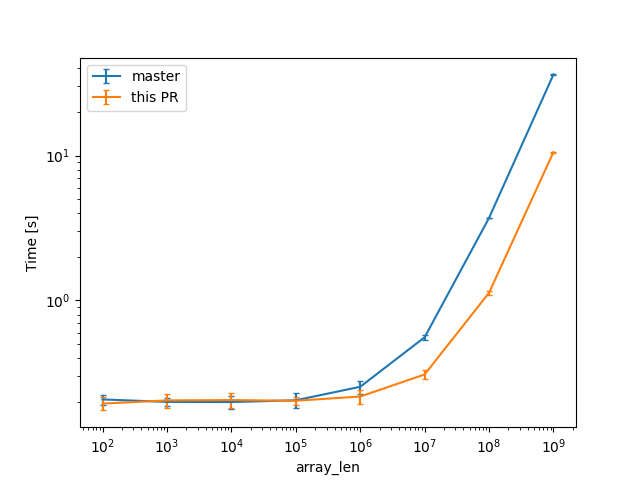

In a check build, for a single static array shown above, from 100 to 10^9 u64s (for lengths in powers of ten), the constant factors are lowered:

(log scales for easier comparisons)

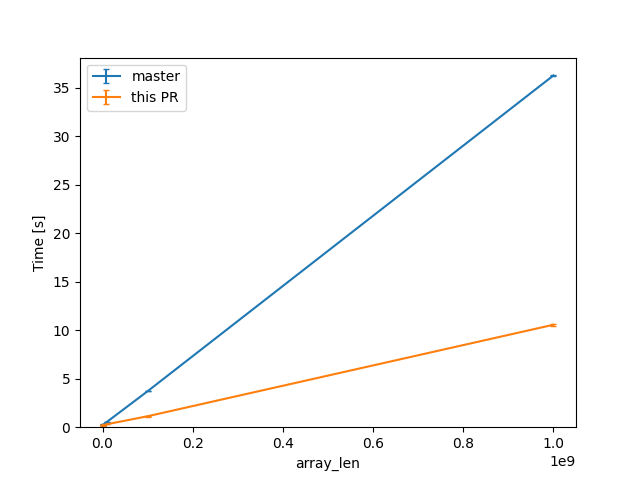

(linear scale for absolute diff at higher Ns)

For one of the alternatives of that issue:

```rust
const ROWS: usize = 100_000;
const COLS: usize = 10_000;

static TWODARRAY: [[u128; COLS]; ROWS] = [[0; COLS]; ROWS];
```

we can see a similar reduction of around 3x (from 38s to 12s or so).

For the same size, the slowest case IIRC is when there are uninitialized bytes, e.g. via padding:

```rust
const ROWS: usize = 100_000;
const COLS: usize = 10_000;

static TWODARRAY: [[(u64, u8); COLS]; ROWS] = [[(0, 0); COLS]; ROWS];
```

In that case, interning/walking no longer dominates (which means there is likely still some interesting work left to do here).

Compile times in this case rise quite a bit, and avoiding interning walks has less impact: around 23%, from 730s on master to 568s with this PR.

Enable MIR inlining

Continuation of https://github.com/rust-lang/rust/pull/82280 by `@wesleywiser`.

#82280 has shown that nice compile-time wins can be obtained by enabling MIR inlining. Most of the issues in https://github.com/rust-lang/rust/issues/81567 are now fixed, except the interaction with polymorphization, which is worked around specifically.

I believe we can proceed with enabling MIR inlining in the near future (preferably just after beta branching, in case we discover new issues).

Steps before merging:

- [x] figure out the interaction with polymorphization;

- [x] figure out how miri should deal with extern types;

- [x] silence the extra arithmetic overflow warnings;

- [x] remove the codegen fulfilment ICE;

- [x] remove the type normalization ICEs while compiling nalgebra;

- [ ] tweak the inlining threshold.

interpret: make a comment less scary

This slipped past my review: "has no meaning" could be read as "is undefined behavior". That is certainly not what we mean, so be clearer.

cleanup mir visitor for `rustc::pass_by_value`

by changing `& $($mutability)?` to `$(& $mutability)?`

I also did some formatting changes because I started doing them for the visit methods I changed and then couldn't get myself to stop. I hope that's still fairly easy to review.
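To make the effect of the macro change concrete, here is a miniature of the pattern. It is only a sketch: `make_visitor!`, `Count`, and `Bump` are hypothetical names, and `u32` stands in for a small `Copy` type that the lint wants passed by value.

```rust
// Hypothetical miniature of the pattern, not the real MIR visitor macro.
macro_rules! make_visitor {
    ($name:ident, $($mutability:ident)?) => {
        trait $name {
            // Old form `& $($mutability)? u32` expands to `&u32` / `&mut u32`.
            // New form `$(& $mutability)? u32` expands to `u32` / `&mut u32`,
            // so the immutable visitor receives the value itself.
            fn visit_local(&mut self, local: $(& $mutability)? u32);
        }
    };
}

make_visitor!(Visitor,);
make_visitor!(MutVisitor, mut);

// Immutable visitor: takes the local by value.
struct Count(u32);
impl Visitor for Count {
    fn visit_local(&mut self, local: u32) {
        self.0 += local;
    }
}

// Mutable visitor: still gets `&mut` so it can rewrite the local.
struct Bump;
impl MutVisitor for Bump {
    fn visit_local(&mut self, local: &mut u32) {
        *local += 1;
    }
}
```

The mutable visitor is unaffected; only the immutable one loses the reference.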

Const eval no longer runs MIR optimizations, so unless this is getting run as part of a MIR optimization like const-prop, there can be unused type parameters even if polymorphization is enabled.

interpret: add From<&MPlaceTy> for PlaceTy

We have a similar instance for `&MPlaceTy` to `OpTy`. Also add the same for `&mut`.

This avoids having to write `&(*place).into()`, which we have a few times here and at least twice in Miri (and it comes up again in my current patch).
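For readers unfamiliar with the pattern, a stripped-down sketch follows. The types here are toy stand-ins with the same names (the real ones carry layouts and lifetimes), and the `addr` field and `Local` variant are invented for the illustration.

```rust
// Toy stand-ins for the interpreter types, not the real definitions.
#[derive(Clone, Copy, Debug, PartialEq)]
struct MPlaceTy {
    addr: u64,
}

#[derive(Clone, Copy, Debug, PartialEq)]
enum PlaceTy {
    Ptr(MPlaceTy),
    #[allow(dead_code)]
    Local(usize),
}

// The by-value conversion: callers holding a reference need `(*place).into()`.
impl From<MPlaceTy> for PlaceTy {
    fn from(mplace: MPlaceTy) -> Self {
        PlaceTy::Ptr(mplace)
    }
}

// The added conversions: `place.into()` now works directly on references.
impl From<&MPlaceTy> for PlaceTy {
    fn from(mplace: &MPlaceTy) -> Self {
        PlaceTy::Ptr(*mplace)
    }
}

impl From<&mut MPlaceTy> for PlaceTy {
    fn from(mplace: &mut MPlaceTy) -> Self {
        PlaceTy::Ptr(*mplace)
    }
}
```

With the reference impls in place, a `&MPlaceTy` or `&mut MPlaceTy` converts with a plain `.into()`.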

r? `@oli-obk`

interpret: do not prune requires_caller_location stack frames quite so early

https://github.com/rust-lang/rust/pull/87000 made the interpreter skip `caller_location` frames for its stacktraces and `cur_span`. However, those functions are used for much more than just panic reporting, and e.g. when Miri reports UB somewhere, it probably wants to point inside `caller_location` frames. (And if it did not, it would want to have its own logic to decide that, not be forced into it by the core interpreter engine.) This fixes some rare ICEs in Miri that say "we should never pop more than one frame at once".

So let's remove all `caller_location` logic from the core interpreter, and instead move it to CTFE error reporting. This does not change user-visible behavior. That's the first commit.

We might additionally want to change CTFE error reporting to treat panics differently from other errors: only prune `caller_location` frames for panics. The second commit does that. But honestly I am not sure if this is an improvement.
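The split described above could look roughly like this. It is a toy sketch under assumptions: `Frame`, `full_stacktrace`, and `ctfe_stacktrace` are hypothetical, and the filter is one plausible shape for the pruning, not necessarily what the commits do.

```rust
// Toy frame; the real interpreter frame carries an instance, locals, spans, etc.
struct Frame {
    name: &'static str,
    // Stand-in for the `requires_caller_location` property of the frame's function.
    requires_caller_location: bool,
}

// Core interpreter side: report every frame, innermost first, with no pruning,
// so that tools like Miri can point inside `caller_location` frames.
fn full_stacktrace(stack: &[Frame]) -> Vec<&'static str> {
    stack.iter().rev().map(|frame| frame.name).collect()
}

// CTFE error-reporting side (hypothetical helper): prune the frames here instead.
fn ctfe_stacktrace(stack: &[Frame]) -> Vec<&'static str> {
    stack
        .iter()
        .rev()
        .filter(|frame| !frame.requires_caller_location)
        .map(|frame| frame.name)
        .collect()
}
```

The point of the design is that the pruning policy lives with the consumer of the stacktrace rather than in the shared engine.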

r? `@oli-obk`

Checking the size/alignment of an mplace may be costly, so we only do it on the types where the walk we want to avoid could be expensive: the larger types like arrays and slices, rather than on all aggregates being interned.

Reorganizes the previous commits to have a single exit-point to avoid doing the potentially costly walk. Also moves the relocations tests before the interior mutability test: only references are important when checking for `UnsafeCell`s and we're checking if there are any to decide to avoid the walk anyways.

The interning of const allocations visits the mplace looking for references to intern. Walking big aggregates like big static arrays can be costly, so we only do it if the allocation we're interning contains references or interior mutability.

Walking ZSTs was avoided before, and this optimization is now applied to cases where there are no references/relocations either.

We now have an infallible function that also tells us which kind of allocation we are talking about.

Also we no longer have to distinguish between data and function allocations for liveness.

Remove dereferencing of Box from codegen

Through #94043, #94414, #94873, and #95328, I've been fixing issues caused by Box being treated like a pointer when it is not a pointer. However, these PRs just introduced special cases for Box. This PR removes those special cases and instead transforms a deref of Box into a deref of the pointer it contains.

Hopefully, this is the end of the Box<T, A> ICEs.
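As a user-level analogy for the transformation (not the codegen change itself), consider a struct that wraps a pointer a few layers deep. `ToyBox` and the toy `Unique` below are hypothetical; the real `Box` has additional fields such as the allocator.

```rust
use std::ptr::NonNull;

// Toy layering: the box is a struct that *contains* a pointer,
// it is not a pointer itself.
struct Unique<T> {
    pointer: NonNull<T>,
}

struct ToyBox<T> {
    ptr: Unique<T>,
}

impl<T> ToyBox<T> {
    fn new(value: T) -> Self {
        let raw = Box::into_raw(Box::new(value));
        // SAFETY: `Box::into_raw` never returns a null pointer.
        let pointer = unsafe { NonNull::new_unchecked(raw) };
        ToyBox { ptr: Unique { pointer } }
    }

    fn get(&self) -> &T {
        // Instead of "dereferencing the box", project through the fields to
        // the raw pointer it contains, then dereference that pointer.
        let raw: *mut T = self.ptr.pointer.as_ptr();
        // SAFETY: `raw` points to a live allocation owned by `self`.
        unsafe { &*raw }
    }
}

impl<T> Drop for ToyBox<T> {
    fn drop(&mut self) {
        // SAFETY: the pointer came from `Box::into_raw` in `new` and is freed only here.
        unsafe { drop(Box::from_raw(self.ptr.pointer.as_ptr())) }
    }
}
```

Here `get` plays the role of the rewritten deref: field projections first, pointer dereference last.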

The current code is a basis for `is_const_fn_raw`, and `impl_constness` is no longer a valid name: it was previously used for determining the constness of impls, not of items in general.

And likewise for the `Const::val` method.

Because its type is called `ConstKind`. Also `val` is a confusing name because `ConstKind` is an enum with seven variants, one of which is called `Value`. Also, this gives consistency with `TyS` and `PredicateS` which have `kind` fields.

The commit also renames a few `Const` variables from `val` to `c`, to avoid confusion with the `ConstKind::Value` variant.

Remove unnecessary `to_string` and `String::new`

73fa217bc1 changed the type of the `suggestion` argument to `impl ToString`. This patch removes unnecessary `to_string` and `String::new`.
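A small sketch of why the calls become redundant, using a hypothetical `span_suggestion` function that only mimics the shape of an `impl ToString` parameter (it is not the real diagnostics API):

```rust
// Hypothetical stand-in for a diagnostics method whose `suggestion`
// parameter has type `impl ToString`.
fn span_suggestion(msg: &str, suggestion: impl ToString) -> String {
    // The callee performs the conversion, so callers can pass `&str` directly.
    format!("{}: `{}`", msg, suggestion.to_string())
}

// Before: `span_suggestion("try", "x.len()".to_string())`
// After:  `span_suggestion("try", "x.len()")`
fn with_code() -> String {
    span_suggestion("try", "x.len()")
}

// Before: `span_suggestion("remove this", String::new())`
// After:  `span_suggestion("remove this", "")`
fn with_removal() -> String {
    span_suggestion("remove this", "")
}
```

Both call styles produce the same result; the shorter one just avoids building a `String` at the call site.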

cc: `@davidtwco`

interpret: unify offset_from check with offset check

`offset` does the check with a single `check_ptr_access` call while `offset_from` used two calls. Make them both use just one call.

I originally intended to actually factor this into a common function, but I am no longer sure if that makes a lot of sense... the two functions start with pretty different preconditions (e.g. `offset` *knows* that the 2nd pointer has the same provenance).

I also reworded the UB messages a little. Saying it "cannot" do something is not how we usually phrase UB (as far as I know). Instead it's not *allowed* to do that.
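The idea of covering both pointers with one check can be sketched on a toy model. Everything here is an assumption for illustration: `Alloc` is a made-up type, pointers are plain offsets into one allocation, and this `check_ptr_access` only borrows the name of the real function.

```rust
// Toy allocation: only its size matters for bounds checking.
struct Alloc {
    size: u64,
}

// Toy bounds check: is the range `offset..offset + len` inside the allocation?
fn check_ptr_access(alloc: &Alloc, offset: u64, len: u64) -> Result<(), String> {
    match offset.checked_add(len) {
        Some(end) if end <= alloc.size => Ok(()),
        _ => Err(format!("out-of-bounds access at offset {offset} with length {len}")),
    }
}

// One check over the whole range between the two pointers covers both of them,
// instead of checking each pointer separately.
fn offset_from(alloc: &Alloc, a: u64, b: u64) -> Result<i64, String> {
    let (start, dist) = if a >= b { (b, a - b) } else { (a, b - a) };
    check_ptr_access(alloc, start, dist)?;
    // Toy arithmetic: offsets are assumed small enough to fit in `i64`.
    Ok(a as i64 - b as i64)
}
```

If the lower pointer is in bounds and the range up to the higher pointer is too, both pointers are necessarily in bounds, which is why a single call suffices in this model.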

r? `@oli-obk`

use precise spans for recursive const evaluation

This fixes https://github.com/rust-lang/rust/issues/73283 by using a `TyCtxtAt` with a more precise span when the interpreter recursively calls itself. Hopefully such calls are sufficiently rare that this does not cost us too much performance.

(In theory, cycles can also arise through layout computation, as layout can depend on consts -- but layout computation happens all the time so we'd have to do something to not make this terrible for performance.)