I don't like our macro tests -- they are brittle and don't inspire

confidence. I think the reason for that is that we try to unit-test

them, but that is at odds with reality, where macro expansion

fundamentally depends on name resolution.

Consider these examples:

{ 92 }

async { 92 }

'a: { 92 }

#[a] { 92 }

Previously, the trees for them were:

BLOCK_EXPR

{ ... }

EFFECT_EXPR

async

BLOCK_EXPR

{ ... }

EFFECT_EXPR

'a:

BLOCK_EXPR

{ ... }

BLOCK_EXPR

#[a]

{ ... }

As you can see, it gets progressively worse :) The last two items are

especially odd. The last one even violates the balanced curlies

invariant we have (#10357). The new approach is to say that the stuff in

`{}` is stmt_list, and the block is stmt_list + optional modifiers:

BLOCK_EXPR

STMT_LIST

{ ... }

BLOCK_EXPR

async

STMT_LIST

{ ... }

BLOCK_EXPR

'a:

STMT_LIST

{ ... }

BLOCK_EXPR

#[a]

STMT_LIST

{ ... }
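To make the new shape concrete, here is a minimal sketch of "block = optional modifiers + stmt_list" as plain Rust data types. The names `Modifier`, `StmtList` and `BlockExpr::render` are hypothetical and only illustrate the idea; they are not the actual syntax tree API.

```rust
/// Hypothetical modifiers that may precede the statement list.
#[derive(Debug, Clone, PartialEq)]
enum Modifier {
    Async,
    Label(String),
    Attr(String),
}

impl Modifier {
    fn render(&self) -> String {
        match self {
            Modifier::Async => "async".to_string(),
            Modifier::Label(name) => format!("'{}:", name),
            Modifier::Attr(path) => format!("#[{}]", path),
        }
    }
}

/// The `{ ... }` part: both curlies always live in this one node.
#[derive(Debug, Clone, PartialEq)]
struct StmtList {
    body: String,
}

impl StmtList {
    fn render(&self) -> String {
        format!("{{ {} }}", self.body)
    }
}

/// A block is a statement list plus optional modifiers.
#[derive(Debug, Clone, PartialEq)]
struct BlockExpr {
    modifiers: Vec<Modifier>,
    stmt_list: StmtList,
}

impl BlockExpr {
    fn render(&self) -> String {
        let mut parts: Vec<String> = self.modifiers.iter().map(Modifier::render).collect();
        parts.push(self.stmt_list.render());
        parts.join(" ")
    }
}
```

With this shape, all four examples are the same node kind, and the braces are always balanced inside a single child.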

FragmentKind played two roles:

* entry point to the parser

* syntactic category of a macro call

These are different use-cases, and warrant different types. For example,

a macro can't expand to a visibility, but we have such a fragment today.

This PR introduces the `ExpandsTo` enum to separate these two use-cases.

I suspect we might further split `FragmentKind` into `$x:specifier` enum

specific to MBE, and a general parser entry point, but that's for

another PR!
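A rough sketch of the split, assuming illustrative variant sets (the variants and the helper functions below are assumptions for the example, not the exact definitions in the code base):

```rust
/// Parser entry points (illustrative subset).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum FragmentKind {
    Expr,
    Statements,
    Items,
    Pattern,
    Type,
    Visibility,
}

/// Syntactic categories a macro call can expand to.
/// Note that there is deliberately no `Visibility` here.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum ExpandsTo {
    Expr,
    Statements,
    Items,
    Pattern,
    Type,
}

impl ExpandsTo {
    /// Every expansion category has a parser entry point (hypothetical helper).
    fn entry_point(self) -> FragmentKind {
        match self {
            ExpandsTo::Expr => FragmentKind::Expr,
            ExpandsTo::Statements => FragmentKind::Statements,
            ExpandsTo::Items => FragmentKind::Items,
            ExpandsTo::Pattern => FragmentKind::Pattern,
            ExpandsTo::Type => FragmentKind::Type,
        }
    }
}

/// The reverse direction is partial: not every fragment is a valid
/// expansion target (hypothetical helper).
fn as_expansion(kind: FragmentKind) -> Option<ExpandsTo> {
    match kind {
        FragmentKind::Expr => Some(ExpandsTo::Expr),
        FragmentKind::Statements => Some(ExpandsTo::Statements),
        FragmentKind::Items => Some(ExpandsTo::Items),
        FragmentKind::Pattern => Some(ExpandsTo::Pattern),
        FragmentKind::Type => Some(ExpandsTo::Type),
        FragmentKind::Visibility => None,
    }
}
```

Keeping the two enums separate lets the type system rule out "a macro call that expands to a visibility" instead of relying on convention.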

9970: feat: Implement attribute input token mapping, fix attribute item token mapping r=Veykril a=Veykril

The token mapping for items with attributes was partially overwritten by the attribute's non-item input, since attributes have two different inputs: the item and the direct input.

This PR gives attributes a second TokenMap for their direct input. We now shift all normal input IDs by the item input maximum (we may want to swap this, see below), similar to what we do for macro-rules/def. For mapping down, we then have to figure out whether we are inside the direct attribute input or its item input to pick the appropriate mapping, which can be done with some token range comparisons.
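The idea can be sketched roughly as follows. This is a simplified model, not the real `TokenMap`: the types, field names and the `map_down` helper are hypothetical, and it assumes that the direct-input IDs are the ones shifted past the largest item ID.

```rust
use std::ops::Range;

type TokenId = u32;

/// Simplified stand-in for a token map: token id -> text range.
struct TokenMap {
    entries: Vec<(TokenId, Range<u32>)>,
}

impl TokenMap {
    fn max_id(&self) -> Option<TokenId> {
        self.entries.iter().map(|(id, _)| *id).max()
    }

    fn id_at(&self, offset: u32) -> Option<TokenId> {
        self.entries
            .iter()
            .find(|(_, range)| range.contains(&offset))
            .map(|(id, _)| *id)
    }
}

/// An attribute invocation with two inputs, each with its own map.
struct AttrInput {
    item_map: TokenMap,
    attr_args_map: TokenMap,
    /// Text range of the attribute's direct input.
    attr_args_range: Range<u32>,
    /// Amount added to direct-input ids so they cannot collide with item ids.
    shift: u32,
}

impl AttrInput {
    fn new(item_map: TokenMap, attr_args_map: TokenMap, attr_args_range: Range<u32>) -> Self {
        let shift = item_map.max_id().map_or(0, |max| max + 1);
        AttrInput { item_map, attr_args_map, attr_args_range, shift }
    }

    /// Pick the mapping with a range comparison, then shift if needed.
    fn map_down(&self, offset: u32) -> Option<TokenId> {
        if self.attr_args_range.contains(&offset) {
            self.attr_args_map.id_at(offset).map(|id| id + self.shift)
        } else {
            self.item_map.id_at(offset)
        }
    }
}
```

Because the two ID spaces no longer overlap after the shift, neither map can overwrite entries of the other.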

Fixes https://github.com/rust-analyzer/rust-analyzer/issues/9867

Co-authored-by: Lukas Wirth <lukastw97@gmail.com>

We generally avoid "syntax only" helper wrappers, which don't do much:

they make code easier to write, but harder to read. They also make

investigations harder, as "find_usages" needs to be invoked both for the

wrapped and unwrapped APIs.

9260: tree-wide: make rustdoc links spiky so they are clickable r=matklad a=lf-

Rustdoc was complaining about these while I was running with --document-private-items and I figure they should be fixed.

Co-authored-by: Jade <software@lfcode.ca>

8560: Escape characters in doc comments in macros correctly r=jonas-schievink a=ChayimFriedman2

Previously they were escaped twice, both by `.escape_default()` and the debug view of strings (`{:?}`). This leads to things like newlines or tabs in documentation comments being `\\n`, but we unescape literals only once, ending up with `\n`.

This was hard to spot because CMark unescaped them (at least for `'` and `"`), but it did not do so in code blocks.

This also was the root cause of #7781. This issue was solved by using `.escape_debug()` instead of `.escape_default()`, but the real issue remained.

We could bring `.escape_default()` back now; however, I didn't, because it is probably slower than `.escape_debug()` (more work to do), and also to keep the code change minimal.
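The double escaping is easy to reproduce with the standard library alone. The two helper functions below are hypothetical and only demonstrate the effect; they are not the code touched by this PR.

```rust
/// Escapes the text with `escape_default()` and then again via `{:?}`.
fn escaped_twice(doc: &str) -> String {
    let once = doc.escape_default().to_string();
    format!("{:?}", once)
}

/// Escapes the text only once, via the debug view of the string.
fn escaped_once(doc: &str) -> String {
    format!("{:?}", doc)
}
```

For the input `"a\nb"` (with a real newline), `escaped_once` yields the literal `"a\nb"`, while `escaped_twice` yields `"a\\nb"`. Unescaping the latter once leaves a backslash followed by `n` rather than a newline.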

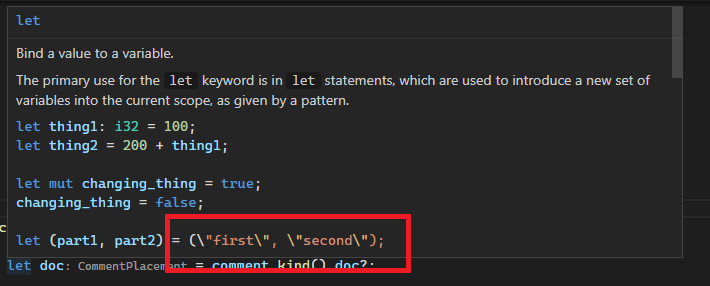

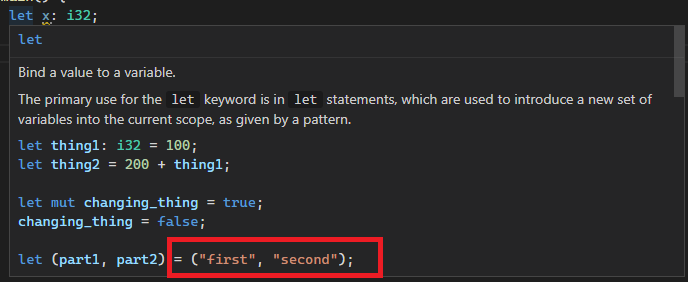

Example (the keyword and primitive docs are `include!()`d at https://doc.rust-lang.org/src/std/lib.rs.html#570-578, and thus originate from macro):

Before:

After:

Co-authored-by: Chayim Refael Friedman <chayimfr@gmail.com>

use vec![] instead of Vec::new() + push()

avoid redundant clones

use chars instead of &str for single char patterns in ends_with() and starts_with()

allocate some Vecs with capacity to avoid unnecessary resizing
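For illustration, the kinds of cleanups listed above look roughly like this (the functions are made up for the example and do not come from the code base):

```rust
/// `vec![]` instead of `Vec::new()` followed by `push()` calls.
fn default_names() -> Vec<&'static str> {
    vec!["foo", "bar"]
}

/// `char` patterns instead of one-character `&str` patterns.
fn is_bracketed(s: &str) -> bool {
    s.starts_with('[') && s.ends_with(']')
}

/// Pre-allocating when the final length is known avoids repeated resizing.
fn squares(n: usize) -> Vec<usize> {
    let mut out = Vec::with_capacity(n);
    for i in 0..n {
        out.push(i * i);
    }
    out
}
```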

7994: Speed up mbe matching in heavy recursive cases r=edwin0cheng a=edwin0cheng

In some cases (e.g. #4186), mbe matching is very slow due to a lot of copying and allocation for bindings. This PR tries to solve this problem by introducing a semi "linked-list" approach for building bindings.

I used this [test case](https://github.com/weiznich/minimal_example_for_rust_81262) (for `features(32-column-tables)`) and ran the following command as a benchmark:

```

time rust-analyzer analysis-stats --load-output-dirs ./

```

Before this PR: 2 mins

After this PR: 3 seconds.

However, the 64-column-tables case still takes 4 mins to complete.

I will try to investigate this in the following weeks.
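To illustrate why a linked-list layout helps, here is a minimal sketch of bindings stored as a persistent list with shared tails. It assumes string names and values and uses `Rc`; the types `BindingList` and `Bindings` are hypothetical and much simpler than what the matcher actually does.

```rust
use std::rc::Rc;

/// A persistent singly linked list: each node points at its parent.
enum BindingList {
    Nil,
    Cons { name: String, value: String, parent: Rc<BindingList> },
}

/// Cloning this only copies a pointer, never the bindings themselves.
#[derive(Clone)]
struct Bindings {
    head: Rc<BindingList>,
    len: usize,
}

impl Bindings {
    fn new() -> Self {
        Bindings { head: Rc::new(BindingList::Nil), len: 0 }
    }

    /// O(1): the new list shares all existing nodes with `self`.
    fn push(&self, name: &str, value: &str) -> Bindings {
        let node = BindingList::Cons {
            name: name.to_string(),
            value: value.to_string(),
            parent: Rc::clone(&self.head),
        };
        Bindings { head: Rc::new(node), len: self.len + 1 }
    }

    /// Materialize the bindings once, oldest first, when matching is done.
    fn collect(&self) -> Vec<(String, String)> {
        let mut out = Vec::with_capacity(self.len);
        let mut node = &self.head;
        while let BindingList::Cons { name, value, parent } = &**node {
            out.push((name.clone(), value.clone()));
            node = parent;
        }
        out.reverse();
        out
    }
}
```

When the matcher forks into several candidate states, each fork can extend the same base list without copying it, which is where the savings in heavily recursive macros would come from.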

bors r+

Co-authored-by: Edwin Cheng <edwin0cheng@gmail.com>

7211: Fixed expr meta var after path colons in mbe r=matklad a=edwin0cheng

Fixes #7207

Added `L_DOLLAR` to `ITEM_RECOVERY_SET`, but I don't know whether it is a good idea.

r? @matklad

Co-authored-by: Edwin Cheng <edwin0cheng@gmail.com>

7145: Proper handling $crate Take 2 [DO NOT MERGE] r=edwin0cheng a=edwin0cheng

Similar to the previous PR (#7133), but with the following improvements:

1. Instead of storing the whole `ExpansionInfo`, we store a similar but stripped-down version, `HygieneInfo`.

2. Instead of storing the `SyntaxNode`, we store only the `TextRange` (every token we are interested in is an IDENT).

3. Because of 2, we can now put it in Salsa.

4. The most important improvement: instead of computing all the frames every single time, we compute them recursively through Salsa, so that in the best case we only need to compute the first layer of frames:

```rust

let def_site = db.hygiene_frame(info.def.file_id);

let call_site = db.hygiene_frame(info.arg.file_id);

HygieneFrame { expansion: Some(info), local_inner, krate, call_site, def_site }

```
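The effect of going through a memoizing query can be sketched with a plain `HashMap` cache standing in for Salsa. Everything below (`Db`, the `expansions` table, the `computed` counter) is a hypothetical model for illustration; real Salsa queries also handle invalidation, which this sketch ignores, and it assumes the expansion graph is acyclic.

```rust
use std::collections::HashMap;
use std::rc::Rc;

type FileId = u32;

#[derive(Debug)]
struct HygieneFrame {
    file: FileId,
    call_site: Option<Rc<HygieneFrame>>,
    def_site: Option<Rc<HygieneFrame>>,
}

#[derive(Default)]
struct Db {
    /// Macro expansion file -> (definition file, call-site file).
    /// Ordinary source files have no entry.
    expansions: HashMap<FileId, (FileId, FileId)>,
    /// Stand-in for Salsa's memoization.
    cache: HashMap<FileId, Rc<HygieneFrame>>,
    /// Counts how many frames were actually computed.
    computed: usize,
}

impl Db {
    fn hygiene_frame(&mut self, file: FileId) -> Rc<HygieneFrame> {
        if let Some(frame) = self.cache.get(&file) {
            return Rc::clone(frame);
        }
        // Parent frames are themselves queries, so they are computed at most once.
        let (def_site, call_site) = match self.expansions.get(&file).copied() {
            Some((def_file, call_file)) => (
                Some(self.hygiene_frame(def_file)),
                Some(self.hygiene_frame(call_file)),
            ),
            None => (None, None),
        };
        self.computed += 1;
        let frame = Rc::new(HygieneFrame { file, call_site, def_site });
        self.cache.insert(file, Rc::clone(&frame));
        frame
    }
}
```

In this model, asking for the frame of a nested expansion a second time does no work at all, and a sibling expansion that shares the same call site only pays for its own layer.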

Overall, this PR is much faster than the previous one (65s vs 45s):

```

[WITH old PR]

Database loaded 644.86ms, 284mi

Crates in this dir: 36

Total modules found: 576

Total declarations: 11153

Total functions: 8715

Item Collection: 15.78s, 91562mi

Total expressions: 240721

Expressions of unknown type: 2635 (1%)

Expressions of partially unknown type: 2064 (0%)

Type mismatches: 865

Inference: 49.84s, 250747mi

Total: 65.62s, 342310mi

rust-analyzer -q analysis-stats . 66.72s user 0.57s system 99% cpu 1:07.40 total

[WITH this PR]

Database loaded 665.83ms, 284mi

Crates in this dir: 36

Total modules found: 577

Total declarations: 11188

Total functions: 8743

Item Collection: 15.28s, 84919mi

Total expressions: 241229

Expressions of unknown type: 2637 (1%)

Expressions of partially unknown type: 2064 (0%)

Type mismatches: 868

Inference: 30.15s, 135293mi

Total: 45.43s, 220213mi

rust-analyzer -q analysis-stats . 46.26s user 0.74s system 99% cpu 47.294 total

```

*HOWEVER*, it is still a perf regression (35s vs 45s):

```

[WITHOUT this PR]

Database loaded 657.42ms, 284mi

Crates in this dir: 36

Total modules found: 577

Total declarations: 11177

Total functions: 8735

Item Collection: 12.87s, 72407mi

Total expressions: 239380

Expressions of unknown type: 2643 (1%)

Expressions of partially unknown type: 2064 (0%)

Type mismatches: 868

Inference: 22.88s, 97889mi

Total: 35.74s, 170297mi

rust-analyzer -q analysis-stats . 36.71s user 0.63s system 99% cpu 37.498 total

```

Co-authored-by: Edwin Cheng <edwin0cheng@gmail.com>