Merge #10127

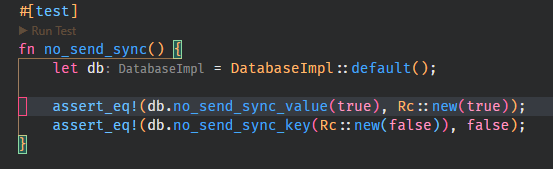

10127: fix: When descending tokens don't bail on failed macro call expansions r=Veykril a=Veykril (with `#[test]` expansion enabled)  The problem was pretty simple, since we go through the ancestors we first try to expand the macro call node we are in since in attributed items these are valid syntaxnodes instead of TokenTrees, we then fail the expansion since the expansion only exists in the attribute expanded file and therefor skip the attribute expansion due to returning immediately. So instead of breaking out we just continue looking up the ancestors. Fixes https://github.com/rust-analyzer/rust-analyzer/issues/10115 bors r+ Co-authored-by: Lukas Wirth <lukastw97@gmail.com>

This commit is contained in:

commit

796bfccac4

@ -467,65 +467,73 @@ impl<'db> SemanticsImpl<'db> {

|

||||

let mut queue = vec![InFile::new(sa.file_id, token)];

|

||||

let mut cache = self.expansion_info_cache.borrow_mut();

|

||||

let mut res = smallvec![];

|

||||

// Remap the next token in the queue into a macro call its in, if it is not being remapped

|

||||

// either due to not being in a macro-call or because its unused push it into the result vec,

|

||||

// otherwise push the remapped tokens back into the queue as they can potentially be remapped again.

|

||||

while let Some(token) = queue.pop() {

|

||||

self.db.unwind_if_cancelled();

|

||||

|

||||

let was_not_remapped = (|| {

|

||||

for node in token.value.ancestors() {

|

||||

match_ast! {

|

||||

match node {

|

||||

ast::MacroCall(macro_call) => {

|

||||

let tt = macro_call.token_tree()?;

|

||||

let l_delim = match tt.left_delimiter_token() {

|

||||

Some(it) => it.text_range().end(),

|

||||

None => tt.syntax().text_range().start()

|

||||

};

|

||||

let r_delim = match tt.right_delimiter_token() {

|

||||

Some(it) => it.text_range().start(),

|

||||

None => tt.syntax().text_range().end()

|

||||

};

|

||||

if !TextRange::new(l_delim, r_delim).contains_range(token.value.text_range()) {

|

||||

return None;

|

||||

}

|

||||

let file_id = sa.expand(self.db, token.with_value(¯o_call))?;

|

||||

let tokens = cache

|

||||

.entry(file_id)

|

||||

.or_insert_with(|| file_id.expansion_info(self.db.upcast()))

|

||||

.as_ref()?

|

||||

.map_token_down(self.db.upcast(), None, token.as_ref())?;

|

||||

if let Some(macro_call) = ast::MacroCall::cast(node.clone()) {

|

||||

let tt = match macro_call.token_tree() {

|

||||

Some(tt) => tt,

|

||||

None => continue,

|

||||

};

|

||||

let l_delim = match tt.left_delimiter_token() {

|

||||

Some(it) => it.text_range().end(),

|

||||

None => tt.syntax().text_range().start(),

|

||||

};

|

||||

let r_delim = match tt.right_delimiter_token() {

|

||||

Some(it) => it.text_range().start(),

|

||||

None => tt.syntax().text_range().end(),

|

||||

};

|

||||

if !TextRange::new(l_delim, r_delim)

|

||||

.contains_range(token.value.text_range())

|

||||

{

|

||||

continue;

|

||||

}

|

||||

let file_id = match sa.expand(self.db, token.with_value(¯o_call)) {

|

||||

Some(file_id) => file_id,

|

||||

None => continue,

|

||||

};

|

||||

let tokens = cache

|

||||

.entry(file_id)

|

||||

.or_insert_with(|| file_id.expansion_info(self.db.upcast()))

|

||||

.as_ref()?

|

||||

.map_token_down(self.db.upcast(), None, token.as_ref())?;

|

||||

|

||||

let len = queue.len();

|

||||

queue.extend(tokens.inspect(|token| {

|

||||

if let Some(parent) = token.value.parent() {

|

||||

self.cache(find_root(&parent), token.file_id);

|

||||

}

|

||||

}));

|

||||

return (queue.len() != len).then(|| ());

|

||||

},

|

||||

ast::Item(item) => {

|

||||

if let Some(call_id) = self.with_ctx(|ctx| ctx.item_to_macro_call(token.with_value(item.clone()))) {

|

||||

let file_id = call_id.as_file();

|

||||

let tokens = cache

|

||||

.entry(file_id)

|

||||

.or_insert_with(|| file_id.expansion_info(self.db.upcast()))

|

||||

.as_ref()?

|

||||

.map_token_down(self.db.upcast(), Some(item), token.as_ref())?;

|

||||

let len = queue.len();

|

||||

queue.extend(tokens.inspect(|token| {

|

||||

if let Some(parent) = token.value.parent() {

|

||||

self.cache(find_root(&parent), token.file_id);

|

||||

}

|

||||

}));

|

||||

return (queue.len() != len).then(|| ());

|

||||

} else if let Some(item) = ast::Item::cast(node.clone()) {

|

||||

if let Some(call_id) = self

|

||||

.with_ctx(|ctx| ctx.item_to_macro_call(token.with_value(item.clone())))

|

||||

{

|

||||

let file_id = call_id.as_file();

|

||||

let tokens = cache

|

||||

.entry(file_id)

|

||||

.or_insert_with(|| file_id.expansion_info(self.db.upcast()))

|

||||

.as_ref()?

|

||||

.map_token_down(self.db.upcast(), Some(item), token.as_ref())?;

|

||||

|

||||

let len = queue.len();

|

||||

queue.extend(tokens.inspect(|token| {

|

||||

if let Some(parent) = token.value.parent() {

|

||||

self.cache(find_root(&parent), token.file_id);

|

||||

}

|

||||

}));

|

||||

return (queue.len() != len).then(|| ());

|

||||

let len = queue.len();

|

||||

queue.extend(tokens.inspect(|token| {

|

||||

if let Some(parent) = token.value.parent() {

|

||||

self.cache(find_root(&parent), token.file_id);

|

||||

}

|

||||

},

|

||||

_ => {}

|

||||

}));

|

||||

return (queue.len() != len).then(|| ());

|

||||

}

|

||||

}

|

||||

}

|

||||

None

|

||||

})().is_none();

|

||||

})()

|

||||

.is_none();

|

||||

if was_not_remapped {

|

||||

res.push(token.value)

|

||||

}

|

||||

|

||||

Loading…

x

Reference in New Issue

Block a user